NetFlow Configuration Examples for Cisco Routers

Catalyst 4500 Series Switch IOS NetFlow Configuration

----------------------------------------

switch(config)# ip flow ingress

switch(config)# ip flow ingress infer-fields

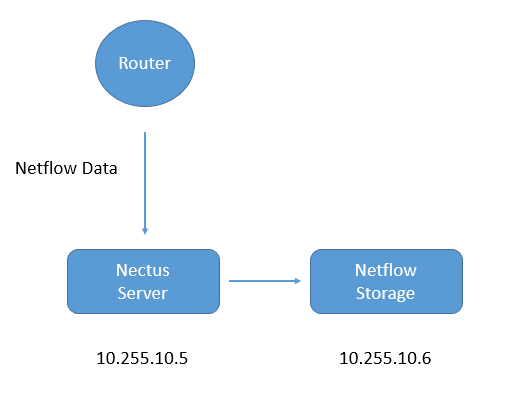

switch(config)# ip flow-export destination <Nectus IP address> 2055

switch(config)# ip flow-export source Loopback0

switch(config)# ip flow-export version 9

switch(config)# ip flow-cache timeout active 1

switch(config)# ip flow-cache timeout inactive 15

Cisco 3800 Series Router NetFlow Configuration

----------------------------------------

Step 1. Define Flow Record format

router(config)# flow record NECTUS_NETFLOW_RECORD

router(config-flow-record)# description NetFlow record format to send to Nectus NetFlow Collector

router(config-flow-record)# match ipv4 ttl

router(config-flow-record)# match ipv4 tos

router(config-flow-record)# match ipv4 protocol

router(config-flow-record)# match ipv4 source address

router(config-flow-record)# match ipv4 destination address

router(config-flow-record)# match transport source-port

router(config-flow-record)# match transport destination-port

router(config-flow-record)# match interface input

router(config-flow-record)# match flow direction

router(config-flow-record)# collect interface input

router(config-flow-record)# collect interface output

router(config-flow-record)# collect counter bytes

router(config-flow-record)# collect counter packets

router(config-flow-record)# collect timestamp absolute first

router(config-flow-record)# collect timestamp absolute last

router(config-flow-record)# collect routing source as

router(config-flow-record)# collect routing destination as

Step 2. Create Flow Exporter (Specify where NetFlow data is sent)

router(config)# flow exporter NECTUS_NETFLOW_EXPORTER

router(config-flow-exporter)# description Export NetFlow to Nectus

router(config-flow-exporter)# destination <Nectus IP address>

router(config-flow-exporter)# source Loopback0

router(config-flow-exporter)# transport udp 2055

router(config-flow-exporter)# export-protocol netflow-v9

Step 3. Create Flow Monitor (Bind Flow Record to the Flow Exporter)

router(config)# flow monitor NECTUS_NETFLOW_IPv4_MONITOR

router(config-flow-monitor)# record NECTUS_NETFLOW_RECORD

router(config-flow-monitor)# exporter NECTUS_NETFLOW_EXPORTER

router(config-flow-monitor)# cache timeout active 60

Step 4. Assign Flow Monitor to Selected Interfaces

Repeat this step for every interface on which you want to collect NetFlow data.

router(config)# interface TenGigabitEthernet 1/1

router(config-if)# ip flow monitor NECTUS_NETFLOW_IPv4_MONITOR input

router(config-if)# ip flow monitor NECTUS_NETFLOW_IPv4_MONITOR output
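When many interfaces need the monitor, typing Step 4 by hand gets repetitive. The following is a minimal Python sketch that prints the Step 4 commands for a list of interfaces so they can be pasted into configuration mode. The function name `monitor_commands` and the interface names are placeholders introduced here for illustration; only the monitor name comes from Step 3.

```python
def monitor_commands(interfaces, monitor="NECTUS_NETFLOW_IPv4_MONITOR"):
    """Return the Step 4 configuration lines for each interface name."""
    lines = []
    for name in interfaces:
        lines.append(f"interface {name}")
        # Apply the monitor in both directions, as in Step 4.
        lines.append(f" ip flow monitor {monitor} input")
        lines.append(f" ip flow monitor {monitor} output")
    return lines


if __name__ == "__main__":
    # Placeholder interface names; substitute the ones on your router.
    for line in monitor_commands(["GigabitEthernet0/0", "GigabitEthernet0/1"]):
        print(line)
```

Review the generated lines before pasting them, since the script does not check that the interfaces exist on the device.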

Step 5. Operation Validation

router# show flow record NECTUS_NETFLOW_RECORD

router# show flow monitor NECTUS_NETFLOW_IPv4_MONITOR statistics

router# show flow monitor NECTUS_NETFLOW_IPv4_MONITOR cache
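The show commands above confirm what the router is doing; it can also help to confirm that export packets actually reach the collector host. The following is a rough Python sketch, not part of Nectus, that listens on UDP port 2055 and decodes the 20-byte NetFlow v9 packet header (version, record count, system uptime, export time, sequence number, source ID). The names `parse_v9_header` and `listen` are hypothetical, and the sketch assumes it runs on a test host where port 2055 is not already bound by the collector.

```python
import socket
import struct
from collections import namedtuple

# NetFlow v9 packet header: six big-endian fields, 20 bytes in total.
V9Header = namedtuple(
    "V9Header", "version count sys_uptime_ms unix_secs sequence source_id"
)
_HEADER = struct.Struct("!HHIIII")


def parse_v9_header(datagram):
    """Decode the header of one NetFlow v9 export datagram."""
    if len(datagram) < _HEADER.size:
        raise ValueError("datagram is shorter than a NetFlow v9 header")
    header = V9Header(*_HEADER.unpack_from(datagram))
    if header.version != 9:
        raise ValueError(f"unexpected NetFlow version {header.version}")
    return header


def listen(port=2055, max_packets=5):
    """Print the sender and header of the first few datagrams received."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(("0.0.0.0", port))
        for _ in range(max_packets):
            data, (sender, _sender_port) = sock.recvfrom(65535)
            print(sender, parse_v9_header(data))


if __name__ == "__main__":
    listen()
```

If nothing is printed, check the exporter destination and source interface on the router and any firewall between the router and the host. The sketch reads only the packet header and does not decode templates or flow records.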